Introduction to Generative Models

In previous courses, we studied various deep learning models, including discriminative and generative models. This course focuses on generative models, explaining how they work and providing examples and implementations.

Discriminative vs. Generative Models

Here are the definitions of discriminative and generative models:

Discriminative Model: A discriminative model aims to distinguish different types of data. During training, it uses input data \(X\) and their corresponding labels \(Y\). Its goal is to classify new input data. Examples: classification (images, text, sound), object detection, and segmentation.

Generative Model: A generative model learns the data distribution in order to generate new items similar to the training data. Unlike discriminative models, it does not require labels during training. So far, we have seen generative models in course 5 on NLP.

Note: Autoencoders do not fit into either category, as they do not predict labels and do not learn the probability distribution of the input data. However, variational autoencoders, based on a similar architecture, can learn this distribution.

More formally, discriminative models learn the conditional probability \(P(Y \mid X)\) of the labels given the input, while generative models learn \(P(X)\), or the joint distribution \(P(X,Y)\) if labels are available.
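To make the distinction concrete, here is a minimal sketch on a made-up toy dataset (the weather/activity values and all function names are illustrative assumptions, not part of the course). It estimates the joint distribution \(P(X,Y)\) by counting, derives \(P(Y \mid X)\) from it, and draws a new pair from the joint, which is the step a purely discriminative model cannot perform.

```python
import random
from collections import Counter

# Hypothetical toy dataset of (x, y) pairs: x is a feature, y is a label.
data = [("sunny", "walk"), ("sunny", "walk"), ("sunny", "read"),
        ("rainy", "read"), ("rainy", "read"), ("rainy", "walk")]

# Generative view: estimate the joint distribution P(X, Y) by counting.
counts = Counter(data)
joint = {pair: n / len(data) for pair, n in counts.items()}

def p_x(x):
    """Marginal P(X = x), obtained by summing the joint over labels."""
    return sum(p for (xi, _), p in joint.items() if xi == x)

def p_y_given_x(y, x):
    """Discriminative quantity P(Y = y | X = x) = P(x, y) / P(x)."""
    return joint.get((x, y), 0.0) / p_x(x)

def sample_joint(rng):
    """Draw a new (x, y) pair from the estimated joint distribution."""
    pairs = list(joint)
    weights = [joint[p] for p in pairs]
    return rng.choices(pairs, weights=weights, k=1)[0]

rng = random.Random(0)
print(p_y_given_x("walk", "sunny"))  # probability of "walk" given "sunny"
print(sample_joint(rng))             # a generated (x, y) pair
```

A model that only stores `p_y_given_x` can classify but has no way to propose a new `x`; the joint table supports both uses.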

Course Content

This course presents the main families of generative models and provides implementations for each:

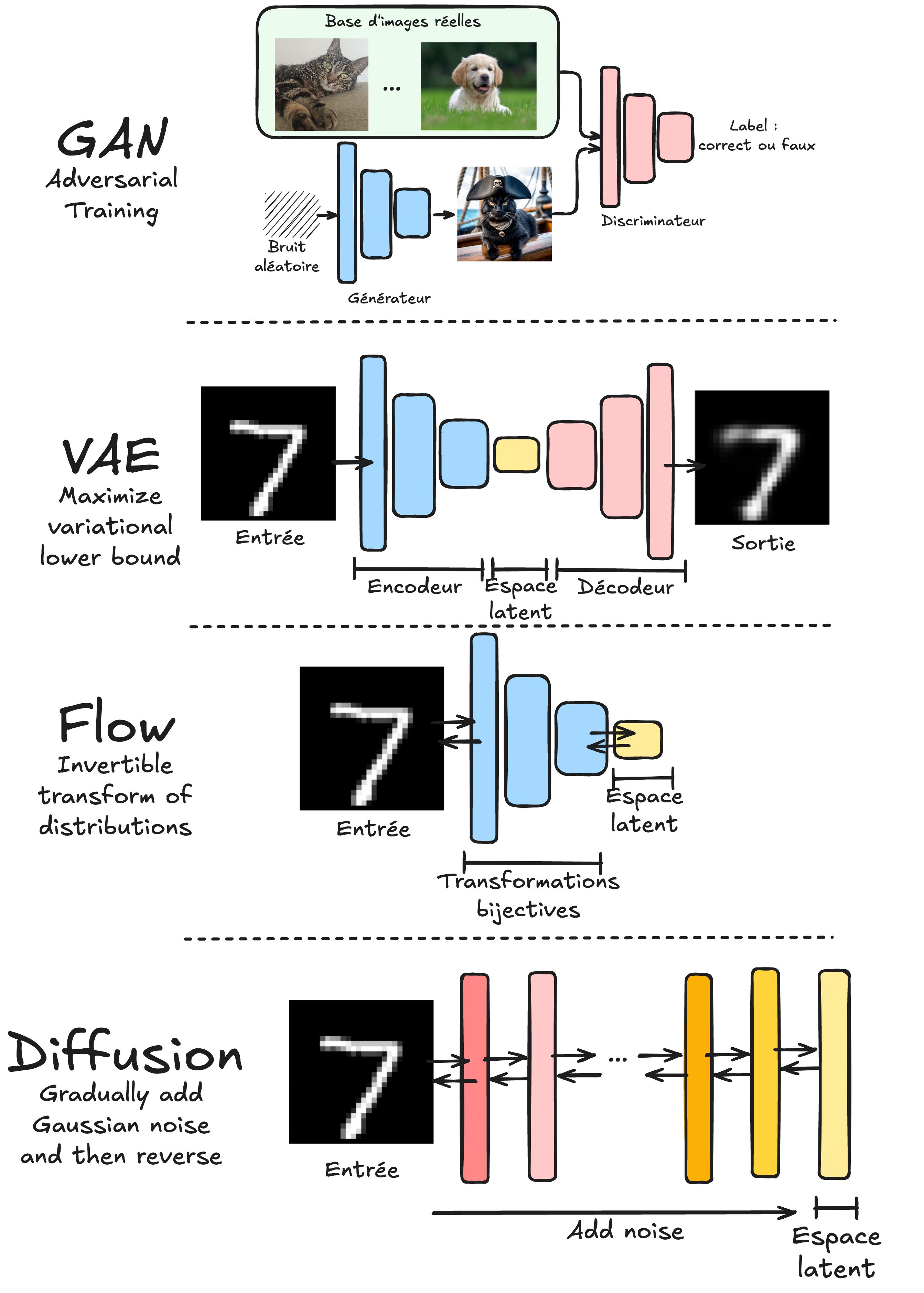

GAN: Notebooks 2 and 3 cover Generative Adversarial Networks. GANs work with two competing models: a generator that creates data similar to the training data distribution and a discriminator that distinguishes real data from generated data.
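As a rough illustration of this competition, the sketch below computes the two losses from discriminator outputs. It assumes the common binary cross-entropy formulation with a non-saturating generator loss; the course notebooks may use a different variant, and the scores are hypothetical values rather than outputs of a real network.

```python
import math

def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy: push D(real) toward 1 and D(fake) toward 0."""
    real_term = -sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term

def generator_loss(d_fake):
    """Non-saturating generator loss: push D(fake) toward 1."""
    return -sum(math.log(p) for p in d_fake) / len(d_fake)

# Hypothetical discriminator outputs (probabilities that a sample is real).
d_real = [0.9, 0.8, 0.95]   # scores on real training samples
d_fake = [0.1, 0.3, 0.2]    # scores on generated samples

print(discriminator_loss(d_real, d_fake))  # low: the discriminator is winning
print(generator_loss(d_fake))              # high: the generator is not fooling it
```

The two losses pull in opposite directions on `d_fake`, which is what makes the training adversarial.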

VAE: Notebooks 4 and 5 cover Variational Autoencoders. Unlike classical autoencoders, VAEs learn a probability distribution in the latent space rather than a deterministic representation.
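The difference from a deterministic code can be sketched in a few lines. The example below assumes the usual setup where an encoder outputs a mean and a log-variance per latent dimension and the prior is a standard Gaussian; the numeric values and function names are hypothetical stand-ins for a real encoder.

```python
import math
import random

def reparameterize(mu, log_var, rng):
    """Sample z = mu + sigma * eps with eps ~ N(0, 1), per latent dimension."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0)
            for m, lv in zip(mu, log_var)]

def kl_to_standard_normal(mu, log_var):
    """KL( N(mu, sigma^2) || N(0, 1) ), summed over latent dimensions."""
    return 0.5 * sum(m * m + math.exp(lv) - 1.0 - lv
                     for m, lv in zip(mu, log_var))

# Hypothetical encoder outputs for one input: mean and log-variance.
mu = [0.5, -1.0]
log_var = [0.0, -2.0]

rng = random.Random(0)
z = reparameterize(mu, log_var, rng)        # a new latent sample on each call
print(z)
print(kl_to_standard_normal(mu, log_var))   # regularization term of the VAE loss
```

A classical autoencoder would return `mu` directly; sampling `z` and penalizing the KL term is what turns the latent space into a distribution one can sample from.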

Normalizing Flows: Notebooks 6 and 7 explore Normalizing Flows. These models use bijective transformations to transition from a simple distribution (like a Gaussian) to the training data distribution.
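The simplest possible bijection, a one-dimensional affine map, already shows the idea. The sketch below uses hypothetical fixed parameters `scale` and `shift` (learned in a real flow) and applies the change-of-variables formula to obtain an exact log-likelihood.

```python
import math

def log_standard_normal(z):
    """Log-density of the base distribution N(0, 1)."""
    return -0.5 * (z * z + math.log(2.0 * math.pi))

def forward(z, scale, shift):
    """Bijection from base space to data space: x = scale * z + shift."""
    return scale * z + shift

def inverse(x, scale, shift):
    """Inverse bijection from data space back to base space."""
    return (x - shift) / scale

def log_prob(x, scale, shift):
    """Change of variables: log p_X(x) = log p_Z(f^{-1}(x)) - log|scale|."""
    z = inverse(x, scale, shift)
    return log_standard_normal(z) - math.log(abs(scale))

# Hypothetical parameters; in a trained flow these would be learned.
scale, shift = 2.0, 1.0
x = forward(0.3, scale, shift)
print(inverse(x, scale, shift))   # recovers z up to floating-point error
print(log_prob(x, scale, shift))  # exact log-likelihood of x
```

Practical flows stack many such invertible layers with richer transformations, but each one contributes a log-determinant term in the same way.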

Diffusion Models: Notebooks 8 and 9 cover Diffusion Models. These models train a network to progressively remove noise from an image; at generation time, the network is applied iteratively, starting from pure Gaussian noise, to produce an image.
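To train such a denoiser, noisy versions of the training images are needed. The sketch below shows one common way to produce them, assuming a DDPM-style forward process with a linear noise schedule; the schedule values, the three-value "image", and the function names are illustrative assumptions, and the course notebooks may differ.

```python
import math
import random

def linear_betas(num_steps, beta_start=1e-4, beta_end=0.02):
    """Linear noise schedule (values are illustrative assumptions)."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]

def cumulative_alphas(betas):
    """alpha_bar_t = product of (1 - beta_s) for s <= t."""
    alpha_bars, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars

def add_noise(x0, t, alpha_bars, rng):
    """Sample x_t = sqrt(alpha_bar_t) * x0 + sqrt(1 - alpha_bar_t) * eps."""
    a = alpha_bars[t]
    eps = [rng.gauss(0.0, 1.0) for _ in x0]
    x_t = [math.sqrt(a) * v + math.sqrt(1.0 - a) * e for v, e in zip(x0, eps)]
    return x_t, eps   # eps is the target a denoising network could learn to predict

alpha_bars = cumulative_alphas(linear_betas(1000))
rng = random.Random(0)
x0 = [1.0, -0.5, 0.25]                   # hypothetical "image" of 3 pixels
x_t, eps = add_noise(x0, 999, alpha_bars, rng)
print(alpha_bars[0], alpha_bars[-1])     # close to 1 at first, close to 0 at the end
```

At the last step almost nothing of `x0` remains, which is why generation can start from pure Gaussian noise and run the learned denoising steps in reverse.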

Note: Autoregressive models (like GPT) are not covered here, as they were detailed in course 5 on NLP.

Note 2: This course offers an introduction to generative models. To delve deeper, check out the Stanford CS236 course: course website and YouTube playlist.