Introduction to NLP

What is NLP?

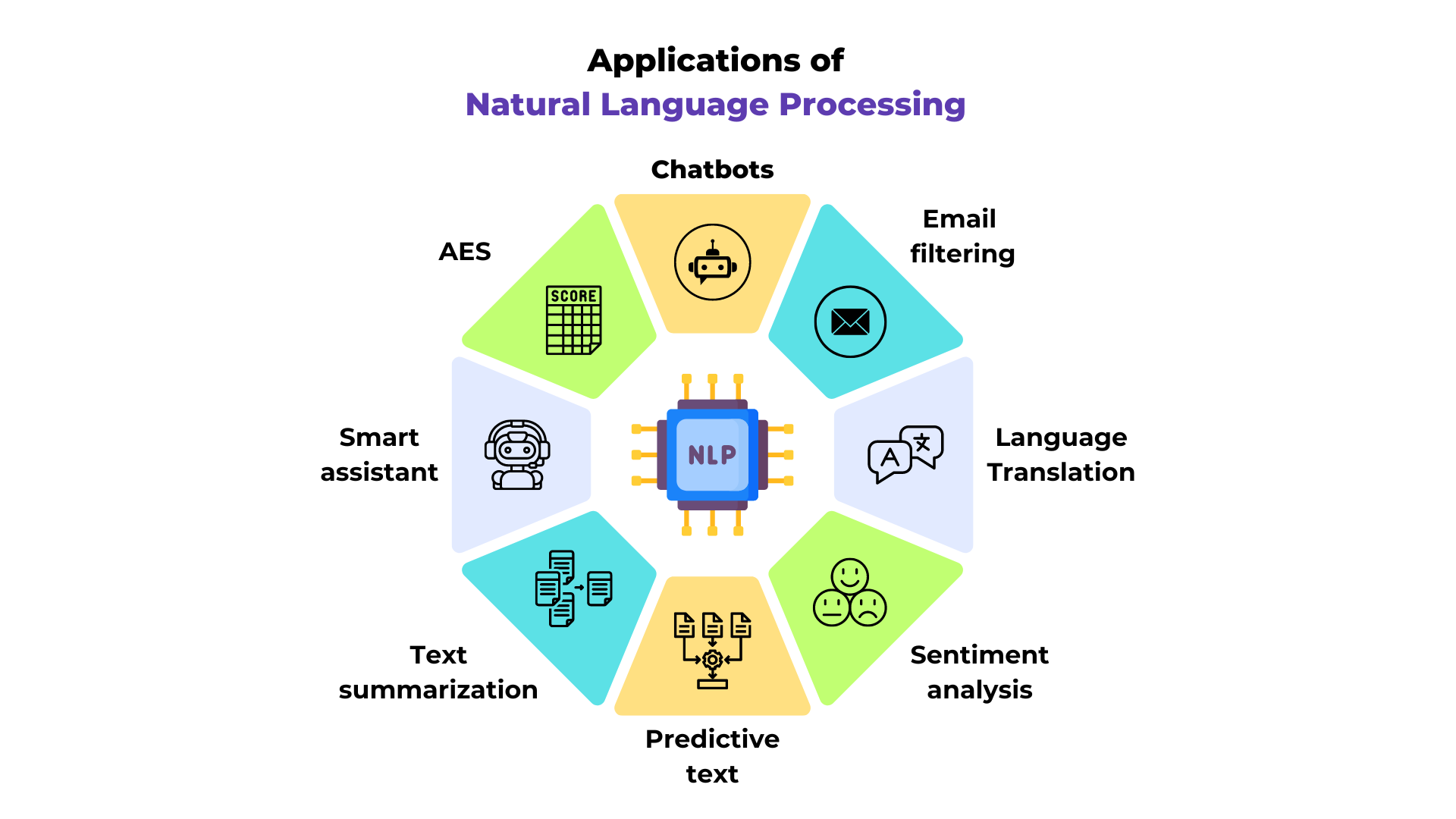

NLP, or Natural Language Processing, is a key field of machine learning. It covers a wide range of text-related tasks such as translation, text comprehension, question-answering systems, and many others.

Figure from the blog post.

NLP stands out in deep learning because it processes discrete data (characters or words), typically read sequentially from left to right.

Course Content

This course focuses primarily on predicting the next token, starting with the next character for simplicity. This problem is at the heart of language models such as GPT, Llama, and Gemini.

The goal is to predict the next token from the previous ones. The context is the number of previous tokens (or words) used for this prediction. Its size varies depending on the method and on the capacity of the model.
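To make the notion of context concrete, here is a minimal sketch (not the course's own code) that turns one name into (context, next character) training pairs for a fixed context size. It assumes characters are the tokens. It also uses '.' as a hypothetical start/end marker, a convention borrowed from Karpathy's makemore. `build_examples` is an illustrative helper name.

```python
def build_examples(word, context_size, boundary="."):
    """Return (context, next_char) pairs for one word.

    Assumption: `boundary` ('.') marks the start and end of a word;
    this convention is illustrative, not fixed by the course text.
    """
    # Pad the start with boundary markers so early characters have a
    # full-length context, and append one marker to signal the end
    padded = boundary * context_size + word + boundary
    examples = []
    for i in range(context_size, len(padded)):
        context = padded[i - context_size:i]  # the previous context_size tokens
        target = padded[i]                     # the token to predict
        examples.append((context, target))
    return examples


for context, target in build_examples("marie", context_size=3):
    print(f"{context!r} -> {target!r}")
```

With `context_size=1`, this reduces to the bigram setting of Course 1: each character is predicted from the single character before it. Larger contexts give the model more information but require more parameters.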

What is a token? A token is a unit of input for the model: a character, a group of characters, or a word. Each token is converted into a vector before being fed into the model.
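The sketch below illustrates character-level tokenization on a few hypothetical names. Each distinct character receives an integer index, and each index is then turned into a one-hot vector. The names `stoi`, `itos`, `encode` and `one_hot`, and the '.' boundary token, are illustrative assumptions rather than the course's code.

```python
# Hypothetical sample names, not the course dataset
names = ["marie", "jean", "pierre"]

# Vocabulary: '.' (assumed boundary token) followed by every distinct character, sorted
chars = sorted(set("".join(names)))
vocab = ["."] + chars
stoi = {ch: i for i, ch in enumerate(vocab)}  # character -> integer index
itos = {i: ch for ch, i in stoi.items()}      # integer index -> character


def encode(text):
    """Convert a string into a list of token indices."""
    return [stoi[ch] for ch in text]


def one_hot(index, size):
    """Convert a token index into a one-hot vector of length `size`."""
    vec = [0] * size
    vec[index] = 1
    return vec


ids = encode("jean")
vectors = [one_hot(i, len(vocab)) for i in ids]
print(ids)         # [4, 2, 1, 6]
print(vectors[0])  # [0, 0, 0, 0, 1, 0, 0, 0, 0]
```

One-hot encoding is only one possible way to turn a token into a vector, but it shows the basic step from discrete symbols to numerical input.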

Course Inspirations

This course is largely inspired by Andrej Karpathy's video series (GitHub repository link), particularly the “building makemore” lessons. Here, we offer a written, French-language version of these lessons. I encourage you to watch this video series, which is one of the best courses on language models available today (and it is free).

We will structure this course into several notebooks, with models of increasing difficulty. The idea is to understand the limitations of each model before moving on to a more complex one.

Here is the course outline:

Course 1: Bigram (classical method and neural networks)

Course 2: Next word prediction with a fully connected network

Course 3: WaveNet (hierarchical architecture)

Course 4: RNN (recurrent neural networks with sequential architecture)

Course 5: LSTM (improved recurrent network)

Note: Course 7, on transformers, addresses the same problem of generating the next character, but with a transformer architecture and a more complex dataset.

Retrieving the prenoms.txt dataset

In this course, we use a dataset of the 30,000 most common first names given in France since 1900 (INSEE data). The prenoms.txt file is already included in the folder, so you do not need to run the code below. If you want to regenerate it, first download the nat2022.csv file from the INSEE website.

```python
import pandas as pd

# Load the CSV file (semicolon-separated)
df = pd.read_csv('nat2022.csv', sep=';')

# Remove the '_PRENOMS_RARES' category, which groups together infrequent names
df_filtered = df[df['preusuel'] != '_PRENOMS_RARES']

# To count, sum the number of births for each name
df_grouped = df_filtered.groupby('preusuel', as_index=False)['nombre'].sum()

# Sort the names by popularity
df_sorted = df_grouped.sort_values(by='nombre', ascending=False)

# Extract the 30,000 most popular names
top_prenoms = df_sorted['preusuel'].head(30000).values

# Write one name per line to prenoms.txt
with open('prenoms.txt', 'w', encoding='utf-8') as file:
    for prenom in top_prenoms:
        file.write(f"{prenom}\n")
```
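To see what the filtering, grouping and sorting steps do without downloading nat2022.csv, here is a minimal pure-Python sketch of the same logic. It swaps pandas for `collections.Counter` and runs on a few made-up (name, birth count) rows. These numbers are invented for illustration and are not INSEE data, and `top_names` is a hypothetical helper.

```python
from collections import Counter


def top_names(rows, n):
    """Sum births per name, skip the rare-names bucket, return the n most frequent names."""
    totals = Counter()
    for name, count in rows:
        if name == "_PRENOMS_RARES":  # same filter as df['preusuel'] != '_PRENOMS_RARES'
            continue
        totals[name] += count          # same role as groupby('preusuel')['nombre'].sum()
    # most_common sorts by total count, descending, like sort_values(...).head(n)
    return [name for name, _ in totals.most_common(n)]


# Invented (name, births) rows: one name can appear for several years/sexes
toy_rows = [
    ("MARIE", 100),
    ("JEAN", 80),
    ("MARIE", 50),
    ("_PRENOMS_RARES", 500),
    ("LUC", 30),
    ("JEAN", 10),
]
print(top_names(toy_rows, 2))  # ['MARIE', 'JEAN']
```

Here MARIE totals 150 births and JEAN 90, so they come first. The rare-names bucket is excluded even though its count is the largest. Names with equal totals may be ordered differently than with pandas.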

In the next notebook, we will start by analyzing the dataset (distinct characters, etc.).